Introduction

The Android platform provides several sensors that enable your app to monitor the motion or position of a device, in addition to other sensors such as the light sensor.

Motion sensors such as the accelerometer or gyroscope are useful for monitoring device movement such as tilt, shake, rotation, or swing. Position sensors are useful for determining a device's physical position in relation to the Earth. For example, you can use a device's geomagnetic field sensor to determine its position relative to the magnetic north pole.

A common use of motion and position sensors, especially for games, is to determine the orientation of the device, that is, the device's bearing (north/south/east/west) and tilt. For example, a driving game could allow the user to control acceleration with a forward tilt or backward tilt, and control steering with a left tilt or right tilt.

Early versions of Android included an explicit sensor type for orientation (Sensor.TYPE_ORIENTATION). The orientation sensor was software-only: it combined data from other sensors to determine bearing and tilt for the device. Because of problems with the accuracy of its algorithm, this sensor type was deprecated in API level 8 and may be unavailable on current devices. The recommended way to determine device orientation uses both the accelerometer and the geomagnetic field sensor, together with several methods in the SensorManager class. These sensors are common, even on older devices. This is the process you learn in this practical.

What you should already know

You should be familiar with:

- Creating, building, and running apps in Android Studio.

- Using the Android sensor framework to gain access to available sensors on the device, and to register and unregister listeners for those sensors.

- Using the onSensorChanged() method from the SensorEventListener interface to handle changes to sensor data.

What you'll learn

- What the accelerometer and magnetometer sensors do.

- The differences between the device-coordinate system and the Earth coordinate system, and which sensors use which systems.

- Orientation angles (azimuth, pitch, roll), and how they relate to other coordinate systems.

- How to use methods from the sensor manager to get the device orientation angles.

- How activity rotation (portrait or landscape) affects sensor input.

What you'll do

- Download and explore a starter app.

- Get the orientation angles from the accelerometer and magnetometer, and update text views in the activity to display those values.

- Change the color of a shape drawable to indicate which edge of the device is tilted up.

- Handle changes to the sensor data when the device is rotated from portrait to landscape.

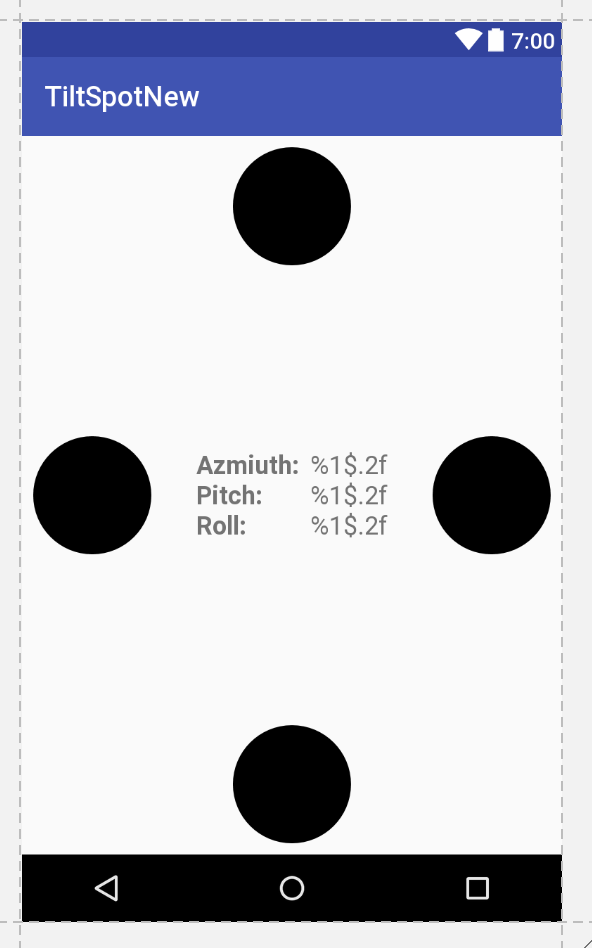

The TiltSpot app displays the device orientation angles as numbers and as colored spots along the four edges of the device screen. There are three components to device orientation:

- Azimuth: The direction (north/south/east/west) the device is pointing. 0 is magnetic north.

- Pitch: The top-to-bottom tilt of the device. 0 is flat.

- Roll: The left-to-right tilt of the device. 0 is flat.

When you tilt the device, the spots along the edges that are tilted up become darker.

In this task you download and open the starter app for the project and explore the layout and activity code. Then you implement the onSensorChanged() method to get data from the sensors, convert that data into orientation angles, and report updates to sensor data in several text views.

1.1 Download and explore the starter app

- Download the TiltSpot_start app and open it in Android Studio.

- Open res/layout/activity_main.xml.

The initial layout for the TiltSpot app includes several text views to display the device orientation angles (azimuth, pitch, and roll); you learn more about how these angles work later in the practical. All those text views are nested inside their own constraint layout to center them both horizontally and vertically within the activity. You need the nested constraint layout because later in the practical you add the spots around the edges of the screen and around this inner layout.

- Open MainActivity.

MainActivity in this starter app contains much of the skeleton code for managing sensors and sensor data as you learned about in the last practical.

- Examine the onCreate() method.

This method gets an instance of the SensorManager service, and then uses the getDefaultSensor() method to retrieve specific sensors. In this app those sensors are the accelerometer (Sensor.TYPE_ACCELEROMETER) and the magnetometer (Sensor.TYPE_MAGNETIC_FIELD).

The accelerometer measures acceleration forces on the device along its three axes, in meters per second squared (m/s²): that is, how much the device is accelerating, and in which direction. Acceleration force includes the force of gravity, so a device lying motionless on a table reports about 9.8 m/s² on the axis that points up. The accelerometer is also sensitive, so even when you think you're holding the device still or leaving it motionless on a table, the accelerometer records minute forces from the environment. This makes the data generated by the accelerometer very "noisy."

The magnetometer, also known as the geomagnetic field sensor, measures the strength of magnetic fields around the device, including Earth's magnetic field. You can use the magnetometer to find the device's position with respect to the external world. However, its readings are also affected by magnetic fields from other devices nearby, by your location on Earth (the field is weaker toward the equator), and even by solar wind.
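Android reports magnetometer values in microtesla (μT) along the device's x, y, and z axes, and Earth's field at the surface is roughly 25 to 65 μT. One rough way to notice interference, sketched below in plain Java, is to compare the strength of a reading with that range. The FieldCheck class, its method names, and the exact bounds are illustrative assumptions, not part of the starter app or the Android API.

```java
/**
 * Hypothetical helper, not part of the starter app or the Android API:
 * checks whether a magnetometer reading (x, y, z in microtesla) has a
 * strength close to that of Earth's field alone.
 */
final class FieldCheck {
    // Approximate range of Earth's field strength at the surface, in microtesla.
    // The exact bounds are an illustrative assumption.
    static final float MIN_EARTH_FIELD_UT = 25f;
    static final float MAX_EARTH_FIELD_UT = 65f;

    private FieldCheck() {}

    /** Length of the (x, y, z) field vector. */
    static float magnitude(float[] values) {
        return (float) Math.sqrt(values[0] * values[0]
                + values[1] * values[1]
                + values[2] * values[2]);
    }

    /** True if the field strength falls inside the assumed Earth-only band. */
    static boolean looksLikeEarthField(float[] values) {
        float strength = magnitude(values);
        return strength >= MIN_EARTH_FIELD_UT && strength <= MAX_EARTH_FIELD_UT;
    }

    public static void main(String[] args) {
        float[] quietRoom = {20f, -30f, -25f};   // made-up sample reading
        float[] nearSpeaker = {300f, 10f, -40f}; // made-up sample reading
        System.out.println(magnitude(quietRoom) + " uT, plausible: "
                + looksLikeEarthField(quietRoom));
        System.out.println(magnitude(nearSpeaker) + " uT, plausible: "
                + looksLikeEarthField(nearSpeaker));
    }
}
```

A strength far outside the band is a strong hint of interference. A strength inside it does not rule interference out, because a nearby field can change the direction of the reading without changing its strength much.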

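Neither sensor alone can determine the device's full orientation. While the device is otherwise still, the accelerometer can estimate tilt, but it can't tell which direction the device is facing; the magnetometer responds to direction, but on its own it can't tell which way is down. However, the Android sensor framework can combine data from both sensors to get a fairly accurate device orientation, accurate enough for the purposes of this app at least.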
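To see why the accelerometer can supply tilt but not heading, the sketch below estimates pitch and roll from a single accelerometer reading. It assumes the device is otherwise at rest, so the reading is dominated by gravity, and it uses the standard tilt formulas with signs chosen to match this practical's conventions (pitch is positive when the bottom edge is raised, roll is positive when the left edge is raised). The AccelTilt class and its methods are hypothetical names for illustration. Spinning the device while it lies flat leaves the accelerometer reading unchanged, which is why no accelerometer-only formula can recover the azimuth.

```java
/**
 * Hypothetical helper: estimates pitch and roll (radians) from a single
 * accelerometer reading (m/s^2), assuming the device is not otherwise
 * accelerating, so the reading is dominated by gravity.
 */
final class AccelTilt {
    // Standard gravity in m/s^2 (same value as SensorManager.GRAVITY_EARTH).
    static final float STANDARD_GRAVITY = 9.80665f;

    private AccelTilt() {}

    static float magnitude(float[] a) {
        return (float) Math.sqrt(a[0] * a[0] + a[1] * a[1] + a[2] * a[2]);
    }

    /** Returns {pitch, roll} in radians, or null if the reading is too weak to use. */
    static float[] pitchAndRoll(float[] a) {
        float norm = magnitude(a);
        // Treat less than 10% of standard gravity as free fall (no usable "down").
        if (norm < 0.1f * STANDARD_GRAVITY) {
            return null;
        }
        float ax = a[0] / norm;
        float ay = a[1] / norm;
        float az = a[2] / norm;
        // Clamp to guard asin() against rounding slightly past +/-1.
        float pitch = (float) Math.asin(Math.max(-1f, Math.min(1f, -ay))); // -pi/2..pi/2
        float roll = (float) Math.atan2(-ax, az);                           // -pi..pi
        return new float[] {pitch, roll};
    }

    public static void main(String[] args) {
        float[] flatOnTable = {0f, 0f, 9.81f};
        float[] leftEdgeRaised = {-4.905f, 0f, 8.4957f}; // about 30 degrees of roll
        float[] tiltFlat = pitchAndRoll(flatOnTable);
        float[] tiltLeft = pitchAndRoll(leftEdgeRaised);
        System.out.println("flat: pitch=" + tiltFlat[0] + " roll=" + tiltFlat[1]);
        System.out.println("left raised: pitch=" + tiltLeft[0] + " roll=" + tiltLeft[1]);
    }
}
```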

- At the top of onCreate(), note this line:

```java
setRequestedOrientation(ActivityInfo.SCREEN_ORIENTATION_PORTRAIT);
```

This line locks the activity in portrait mode, to prevent the app from automatically rotating the activity as you tilt the device. Activity rotation and sensor data can interact in unexpected ways. Later in the practical, you explicitly handle sensor data changes in your app in response to activity rotation, and remove this line.

- Examine the onStart() and onStop() methods. The onStart() method registers the listeners for the accelerometer and magnetometer, and the onStop() method unregisters them.
- Examine onSensorChanged() and onAccuracyChanged(). These are the methods from the SensorEventListener interface that you have to implement. The onAccuracyChanged() method is empty because it is unused in this class. You implement onSensorChanged() in the next task.

1.2 Get sensor data and calculate orientation angles

In this task you implement the onSensorChanged() method to get raw sensor data, use methods from the SensorManager to convert that data to device orientation angles, and update the text views with those values.

- Open MainActivity.
- Add member variables to hold copies of the accelerometer and magnetometer data:

```java
private float[] mAccelerometerData = new float[3];
private float[] mMagnetometerData = new float[3];
```

When a sensor event occurs, both the accelerometer and the magnetometer produce an array of floating-point values, one for each of the x, y, and z axes of the device's coordinate system. You combine the data from both sensors, and each sensor's data arrives in a separate call to onSensorChanged(), so you need to keep a copy of the latest data from each sensor.

- Scroll down to the onSensorChanged() method. Add a line to get the sensor type from the sensor event object:

```java
int sensorType = sensorEvent.sensor.getType();
```

- Add tests for the accelerometer and magnetometer sensor types, and clone the event data into the appropriate member variables:

```java
switch (sensorType) {
    case Sensor.TYPE_ACCELEROMETER:
        mAccelerometerData = sensorEvent.values.clone();
        break;
    case Sensor.TYPE_MAGNETIC_FIELD:
        mMagnetometerData = sensorEvent.values.clone();
        break;
    default:
        return;
}
```

You use the clone() method to explicitly make a copy of the data in the values array. The SensorEvent object (and the array of values it contains) is reused across calls to onSensorChanged(). Cloning those values prevents the data you're currently interested in from being changed by more recent data before you're done with it.
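The problem that clone() guards against can be reproduced in plain Java. In the sketch below, one array stands in for a SensorEvent.values buffer that the framework reuses between events; this is a simplified model for illustration, not the framework's actual event pooling. Keeping a reference picks up the later overwrite, while keeping a clone preserves the earlier values.

```java
import java.util.Arrays;

/** Plain-Java illustration of why onSensorChanged() copies event values. */
final class CloneDemo {
    private CloneDemo() {}

    /**
     * Simulates a framework that reuses one buffer for every event.
     * Returns {aliasedCopy, clonedCopy} as seen after the second event.
     */
    static float[][] simulate() {
        float[] sharedBuffer = {1f, 2f, 3f};   // first "event"
        float[] aliased = sharedBuffer;        // keeps a reference only
        float[] cloned = sharedBuffer.clone(); // keeps a snapshot

        // The second "event" overwrites the same buffer in place.
        sharedBuffer[0] = 10f;
        sharedBuffer[1] = 20f;
        sharedBuffer[2] = 30f;

        return new float[][] {aliased, cloned};
    }

    public static void main(String[] args) {
        float[][] result = simulate();
        System.out.println("aliased: " + Arrays.toString(result[0]));
        System.out.println("cloned:  " + Arrays.toString(result[1]));
    }
}
```

Running main() prints the aliased copy holding the second event's values and the cloned copy still holding the first event's values.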

- After the switch statement, use the SensorManager.getRotationMatrix() method to generate a rotation matrix (explained below) from the raw accelerometer and magnetometer data. The next step uses the matrix to get the device orientation, which is what you're really interested in.

```java
float[] rotationMatrix = new float[9];
boolean rotationOK = SensorManager.getRotationMatrix(rotationMatrix,
        null, mAccelerometerData, mMagnetometerData);
```

A rotation matrix is a linear-algebra tool that translates sensor data from one coordinate system to another: in this case, from the device's coordinate system to the Earth's coordinate system. It is a 3x3 matrix, stored as an array of nine float values in row-major order.

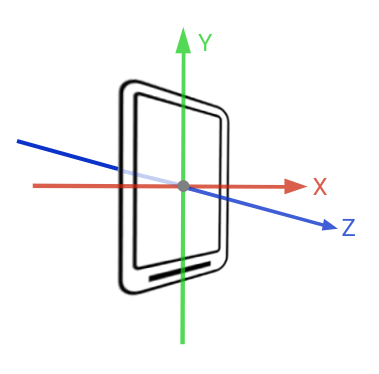

The device-coordinate system is a standard 3-axis (x, y, z) coordinate system relative to the device's screen when it is held in the default or natural orientation. Most sensors use this coordinate system. In this orientation:

- The x-axis is horizontal and points to the right edge of the device.

- The y-axis is vertical and points to the top edge of the device.

- The z-axis extends up from the surface of the screen. Negative z values are behind the screen.

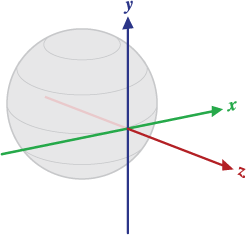

The Earth's coordinate system is also a 3-axis system, but relative to the surface of the Earth itself. In the Earth's coordinate system:

- The y-axis points to magnetic north along the surface of the Earth.

- The x-axis is 90 degrees from y, pointing approximately east.

- The z-axis extends up into space. Negative z extends down into the ground.

A reference to the array for the rotation matrix is passed into the getRotationMatrix() method and modified in place. The second argument to getRotationMatrix() is an inclination matrix, which you don't need for this app. You can use null for this argument.

The getRotationMatrix() method returns a boolean (the rotationOK variable), which is true if the rotation matrix was calculated successfully. It returns false if the device is in free fall (the measured gravity is less than about a tenth of its normal value), or if the magnetic field is nearly parallel to gravity, as it is close to the Earth's magnetic poles. The sensor data can't define an orientation in these cases, so the method leaves the matrix unchanged. Although the return value is almost always true, it's good practice to check it anyway.
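The sketch below shows, in plain Java, how such a matrix can be built from the two readings. It follows the cross-product approach used by the Android Open Source Project implementation of getRotationMatrix(): the cross product of the magnetic vector and gravity points east, and the cross product of gravity and east points toward magnetic north. Both failure cases described above appear as early returns that leave the matrix untouched. RotationSketch is a hypothetical name and the thresholds are illustrative; in the app, keep calling SensorManager.getRotationMatrix().

```java
/**
 * Plain-Java sketch of building a 3x3 rotation matrix (row-major, 9 floats)
 * from gravity and geomagnetic readings. Illustrative only; use
 * SensorManager.getRotationMatrix() in a real app.
 */
final class RotationSketch {
    private RotationSketch() {}

    /** Returns false, leaving r untouched, if the inputs cannot define an orientation. */
    static boolean rotationMatrix(float[] r, float[] gravity, float[] geomagnetic) {
        float ax = gravity[0], ay = gravity[1], az = gravity[2];
        float normA = (float) Math.sqrt(ax * ax + ay * ay + az * az);
        // Free fall: gravity less than about 10% of its nominal 9.81 m/s^2.
        if (normA < 0.1f * 9.81f) {
            return false;
        }
        float ex = geomagnetic[0], ey = geomagnetic[1], ez = geomagnetic[2];
        // H = E x A points east, perpendicular to both the field and gravity.
        float hx = ey * az - ez * ay;
        float hy = ez * ax - ex * az;
        float hz = ex * ay - ey * ax;
        float normH = (float) Math.sqrt(hx * hx + hy * hy + hz * hz);
        // Field (nearly) parallel to gravity, e.g. near a magnetic pole,
        // or no usable field reading at all (an all-zero array).
        if (normH < 0.1f) {
            return false;
        }
        hx /= normH; hy /= normH; hz /= normH;
        ax /= normA; ay /= normA; az /= normA;
        // M = A x H points toward magnetic north along the ground.
        float mx = ay * hz - az * hy;
        float my = az * hx - ax * hz;
        float mz = ax * hy - ay * hx;
        // Rows are the Earth axes (east, north, up) expressed in device coordinates.
        r[0] = hx; r[1] = hy; r[2] = hz;
        r[3] = mx; r[4] = my; r[5] = mz;
        r[6] = ax; r[7] = ay; r[8] = az;
        return true;
    }

    public static void main(String[] args) {
        float[] r = new float[9];
        // Flat, face up, top edge toward magnetic north (northern-hemisphere field dips down).
        boolean ok = rotationMatrix(r, new float[] {0f, 0f, 9.81f}, new float[] {0f, 20f, -40f});
        System.out.println(ok + " " + java.util.Arrays.toString(r));
    }
}
```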

- Call the SensorManager.getOrientation() method to get the orientation angles from the rotation matrix. As with getRotationMatrix(), you pass in an array of float values, and getOrientation() fills it with the angles in place.

```java
float[] orientationValues = new float[3];
if (rotationOK) {
    SensorManager.getOrientation(rotationMatrix, orientationValues);
}
```

The angles returned by the getOrientation() method describe how far the device is oriented or tilted with respect to the Earth's coordinate system. There are three components to orientation:

- Azimuth: The direction (north/south/east/west) the device is pointing. 0 is magnetic north.

- Pitch: The top-to-bottom tilt of the device. 0 is flat.

- Roll: The left-to-right tilt of the device. 0 is flat.

All three angles are measured in radians. Azimuth and roll range from -π (about -3.142) to π; pitch ranges from -π/2 to π/2.
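For a nine-element, row-major rotation matrix, each angle comes from a single inverse trigonometric function. The plain-Java sketch below uses the formulas found in the Android Open Source Project implementation of getOrientation(); OrientationSketch is a hypothetical name, and the sketch illustrates the math rather than replacing the framework call. Because pitch comes from asin(), it can only range from -π/2 to π/2.

```java
/** Plain-Java sketch of extracting azimuth, pitch, and roll from a rotation matrix. */
final class OrientationSketch {
    private OrientationSketch() {}

    /**
     * @param r 3x3 rotation matrix, row-major (9 floats)
     * @return {azimuth, pitch, roll} in radians
     */
    static float[] orientation(float[] r) {
        float azimuth = (float) Math.atan2(r[1], r[4]); // -pi..pi, 0 = magnetic north
        float pitch = (float) Math.asin(-r[7]);          // -pi/2..pi/2
        float roll = (float) Math.atan2(-r[6], r[8]);    // -pi..pi
        return new float[] {azimuth, pitch, roll};
    }

    public static void main(String[] args) {
        // Device flat, top edge pointing at magnetic north: identity matrix.
        float[] flatNorth = {1, 0, 0, 0, 1, 0, 0, 0, 1};
        // Device flat, top edge pointing east: rows are east, north, up in device axes.
        float[] flatEast = {0, 1, 0, -1, 0, 0, 0, 0, 1};
        System.out.println(java.util.Arrays.toString(orientation(flatNorth)));
        System.out.println(java.util.Arrays.toString(orientation(flatEast)));
    }
}
```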

- Create variables for azimuth, pitch, and roll to hold each component of the orientationValues array. You adjust this data later in the practical, which is why it is helpful to have separate variables.

```java
float azimuth = orientationValues[0];
float pitch = orientationValues[1];
float roll = orientationValues[2];
```

- Get the placeholder string from the resources, fill it with each orientation angle, and update the text views:

```java
mTextSensorAzimuth.setText(getResources().getString(
        R.string.value_format, azimuth));
mTextSensorPitch.setText(getResources().getString(
        R.string.value_format, pitch));
mTextSensorRoll.setText(getResources().getString(
        R.string.value_format, roll));
```

The string resource (value_format in strings.xml) contains a placeholder ("%1$.2f") that formats the incoming floating-point value to two decimal places:

```xml
<string name="value_format">%1$.2f</string>
```

1.3 Build and run the app

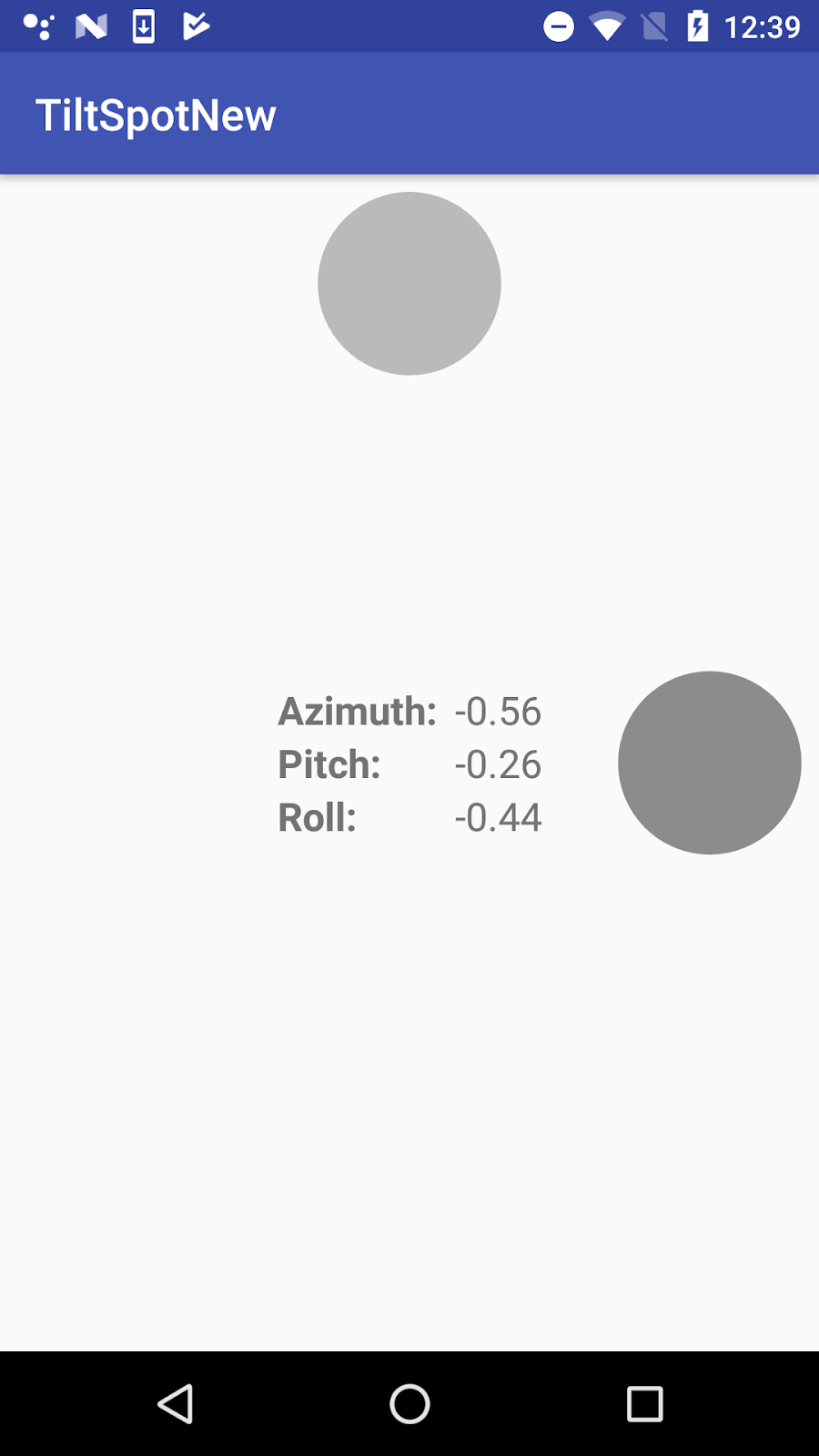

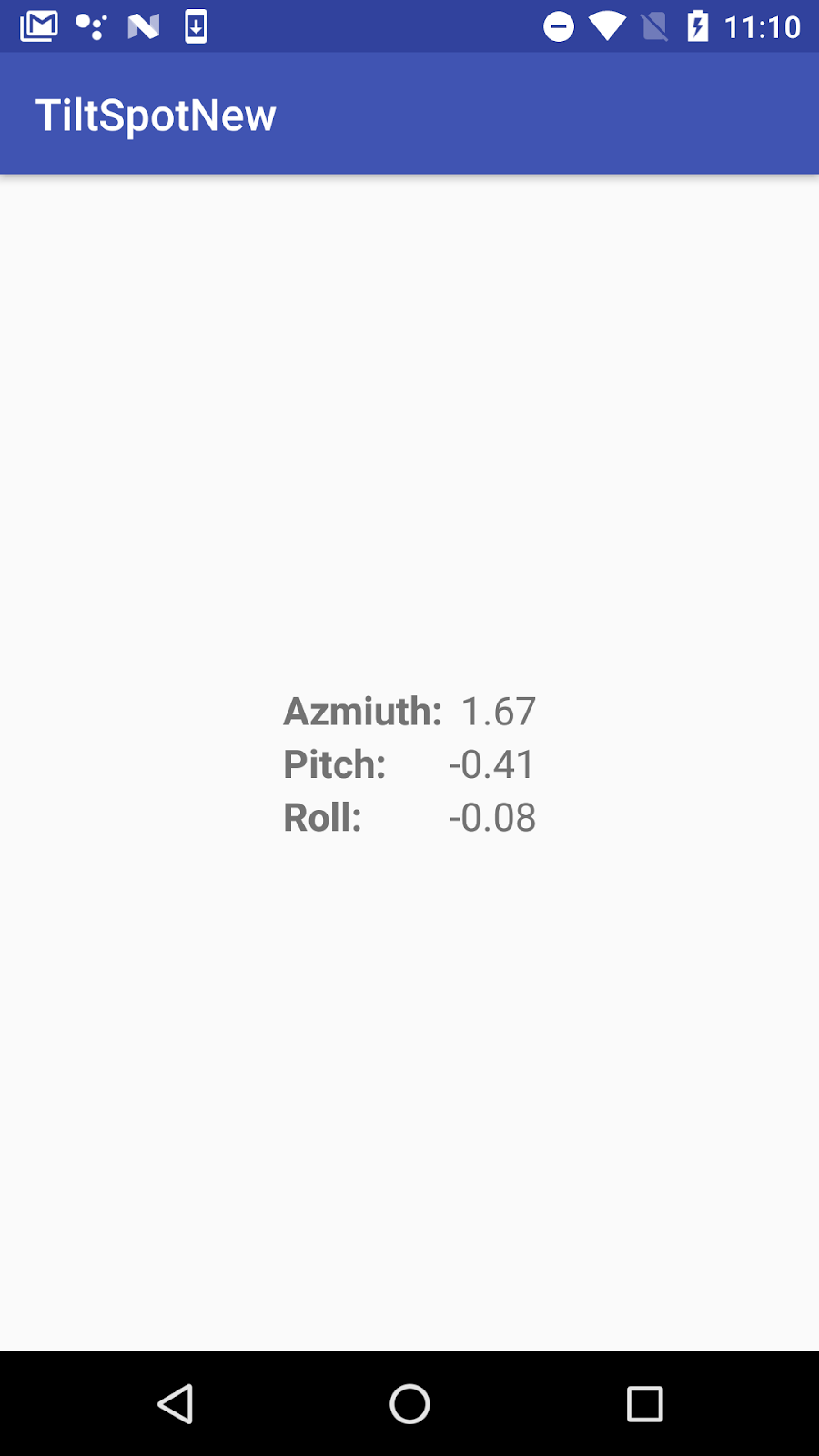

- Run the app. Place your device flat on the table. The output of the app looks something like this:

Even a motionless device shows fluctuating values for the azimuth, pitch, and roll. Note also that even though the device is flat, the values for pitch and roll may not be 0. This is because the device sensors are extremely sensitive and pick up even tiny changes to the environment, both changes in motion and changes in ambient magnetic fields.

- Turn the device on the table from left to right, leaving it flat on the table.

Note how the value of the azimuth changes. An azimuth value of 0 indicates that the device is pointing (roughly) north.

Note that even if the value of the azimuth is 0, the device may not be pointing exactly north. The device magnetometer measures the strength of all magnetic fields around it, not just Earth's. If other magnetic fields are present (most electronics emit magnetic fields, including the device itself), the azimuth reading may be inaccurate.
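Because azimuth is reported in radians from -π to π, it can be easier to check against a compass after converting it to a bearing in degrees from 0 to 360 (0 = magnetic north, 90 = east). The sketch below, with hypothetical names, does that conversion and reuses the "%1$.2f" pattern from the value_format string. Passing Locale.US is an assumption made here so the decimal separator is predictable; the app's getString() call formats using the app's current locale. This is a magnetic bearing: converting it to true north would also need the local magnetic declination, which this practical does not cover.

```java
import java.util.Locale;

/** Hypothetical helper for showing azimuth as a compass bearing. */
final class CompassFormat {
    private static final String[] POINTS = {"N", "NE", "E", "SE", "S", "SW", "W", "NW"};

    private CompassFormat() {}

    /** Converts an azimuth in radians (-pi..pi) to degrees in [0, 360). */
    static double toBearingDegrees(float azimuthRadians) {
        double degrees = Math.toDegrees(azimuthRadians);
        return (degrees % 360.0 + 360.0) % 360.0;
    }

    /** Nearest of eight compass points for a bearing in [0, 360). */
    static String compassPoint(double bearingDegrees) {
        int index = (int) Math.round(bearingDegrees / 45.0) % 8;
        return POINTS[index];
    }

    /** Radians formatted like value_format, followed by the bearing and compass point. */
    static String describe(float azimuthRadians) {
        double bearing = toBearingDegrees(azimuthRadians);
        return String.format(Locale.US, "%1$.2f rad = %2$.0f\u00b0 %3$s",
                azimuthRadians, bearing, compassPoint(bearing));
    }

    public static void main(String[] args) {
        float[] samples = {0f, (float) (Math.PI / 2), (float) (-Math.PI / 2), 3f};
        for (float azimuth : samples) {
            System.out.println(describe(azimuth));
        }
    }
}
```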

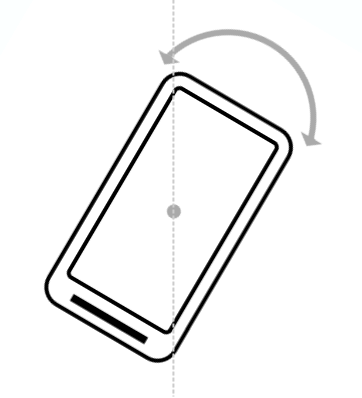

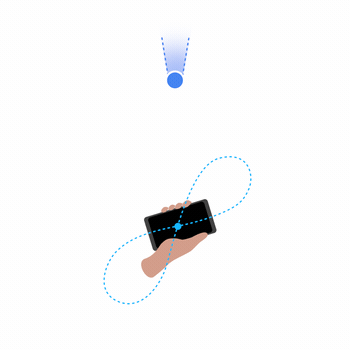

Note: If the azimuth on your device seems very far off from actual north, you can calibrate the magnetometer by waving the device a few times in the air in a figure-eight motion.

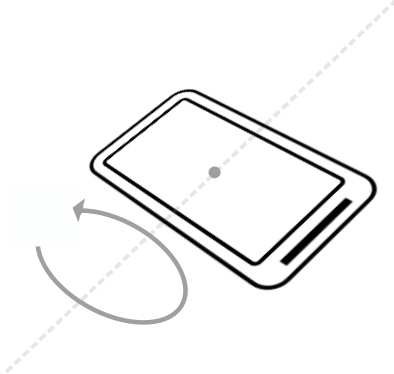

- Lift the bottom edge of the device so the screen is tilted away from you. Note the change to the pitch value. Pitch indicates the top-to-bottom angle of tilt around the device's horizontal axis.

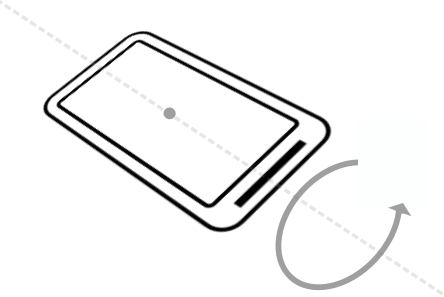

- Lift the left side of the device so that it is tilted to the right. Note the change to the roll value. Roll indicates the left-to-right tilt around the device's vertical axis.

- Pick up the device and tilt it in various directions. Note the changes to the pitch and roll values as the device's tilt changes. What is the maximum value you can find for any tilt direction, and in what device position does that maximum occur?

In this task you update the layout to include spots along each edge of the screen, and change the opacity of the spots so that they become darker when a given edge of the screen is tilted up.

The color changes in the spots rely on dynamically changing the alpha value of a shape drawable in response to new sensor data. The alpha determines the opacity of that drawable, so that smaller alpha values produce a lighter shape, and larger values produce a darker shape.

2.1 Add the spots and modify the layout

- Add a new file called spot.xml to the project, in the res/drawable directory. (Create the directory if needed.)
- Replace the selector tag in spot.xml with an oval shape drawable whose color is solid black ("@android:color/black"):

```xml
<shape
    xmlns:android="http://schemas.android.com/apk/res/android"
    android:shape="oval">
    <solid android:color="@android:color/black"/>
</shape>
```

- Open res/values/dimens.xml. Add a dimension for the spot size:

```xml
<dimen name="spot_size">84dp</dimen>
```

- In activity_main.xml, add an ImageView after the inner ConstraintLayout and before the closing tag of the outer one. Use these attributes:

| Attribute | Value |
| --- | --- |
| android:id | "@+id/spot_top" |
| android:layout_width | "@dimen/spot_size" |
| android:layout_height | "@dimen/spot_size" |
| android:layout_margin | "@dimen/base_margin" |
| app:layout_constraintLeft_toLeftOf | "parent" |
| app:layout_constraintRight_toRightOf | "parent" |
| app:layout_constraintTop_toTopOf | "parent" |
| app:srcCompat | "@drawable/spot" |
| tools:ignore | "ContentDescription" |

This view places a spot drawable, sized by the spot_size dimension, at the top edge of the screen. The app:srcCompat attribute comes from the Android Support Library; it sets the drawable for an ImageView, provides the greatest compatibility for vector drawables, and works equally well for a shape drawable such as this one. (android:src is the framework attribute for setting an image.)

The tools:ignore attribute is used to suppress warnings in Android Studio about a missing content description. Generally ImageView views need alternate text for sight-impaired users, but this app does not use or require them, so you can suppress the warning here.

- Add the following code below that first ImageView. This code adds the other three spots along the remaining edges of the screen.

```xml
<ImageView
    android:id="@+id/spot_bottom"
    android:layout_width="@dimen/spot_size"
    android:layout_height="@dimen/spot_size"
    android:layout_marginBottom="@dimen/base_margin"
    app:layout_constraintBottom_toBottomOf="parent"
    app:layout_constraintLeft_toLeftOf="parent"
    app:layout_constraintRight_toRightOf="parent"
    app:srcCompat="@drawable/spot"
    tools:ignore="ContentDescription" />

<ImageView
    android:id="@+id/spot_right"
    android:layout_width="@dimen/spot_size"
    android:layout_height="@dimen/spot_size"
    android:layout_marginEnd="@dimen/base_margin"
    android:layout_marginRight="@dimen/base_margin"
    app:layout_constraintBottom_toBottomOf="parent"
    app:layout_constraintRight_toRightOf="parent"
    app:layout_constraintTop_toTopOf="parent"
    app:srcCompat="@drawable/spot"
    tools:ignore="ContentDescription" />

<ImageView
    android:id="@+id/spot_left"
    android:layout_width="@dimen/spot_size"
    android:layout_height="@dimen/spot_size"
    android:layout_marginLeft="@dimen/base_margin"
    android:layout_marginStart="@dimen/base_margin"
    app:layout_constraintBottom_toBottomOf="parent"
    app:layout_constraintLeft_toLeftOf="parent"
    app:layout_constraintTop_toTopOf="parent"
    app:srcCompat="@drawable/spot"
    tools:ignore="ContentDescription" />
```

The layout preview should now look like this:

- Add the android:alpha attribute to all four ImageView elements, and set the value to "0.05". The alpha is the opacity of the shape: smaller values are less opaque (less visible). A value of 0.05 makes the spots very nearly invisible, but you can still see them in the layout view.

2.2 Update the spot color with new sensor data

Next you modify the onSensorChanged() method to set the alpha value of the spots in response to the pitch and roll values from the sensor data. A higher sensor value indicates a larger degree of tilt. The higher the sensor value, the more opaque (the darker) you make the spot.

- In MainActivity, add member variables at the top of the class for each of the spot ImageView objects:

```java
private ImageView mSpotTop;
private ImageView mSpotBottom;
private ImageView mSpotLeft;
private ImageView mSpotRight;
```

- In onCreate(), just after initializing the text views for the sensor data, initialize the spot views:

```java
mSpotTop = (ImageView) findViewById(R.id.spot_top);
mSpotBottom = (ImageView) findViewById(R.id.spot_bottom);
mSpotLeft = (ImageView) findViewById(R.id.spot_left);
mSpotRight = (ImageView) findViewById(R.id.spot_right);
```

- In onSensorChanged(), right after the lines that initialize the azimuth, pitch, and roll variables, reset any pitch or roll value that is close to 0 (less than the value of the VALUE_DRIFT constant) to 0:

```java
if (Math.abs(pitch) < VALUE_DRIFT) {
    pitch = 0;
}
if (Math.abs(roll) < VALUE_DRIFT) {
    roll = 0;
}
```

When you initially ran the TiltSpot app, the sensors reported very small non-zero values for the pitch and roll even when the device was flat and stationary. Those small values can cause the app to flash very light-colored spots on all the edges of the screen. In this code, if the values are close to 0 (in either the positive or negative direction), you reset them to 0.
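The drift check is a simple dead band: any value within a small distance of 0 is treated as exactly 0. Pulled out into a helper, as in the sketch below, the same rule can be tested on its own or applied to any angle. DriftFilter is a hypothetical name, and its VALUE_DRIFT value (0.05 radians, about 2.9 degrees) is an assumption for illustration; in the app, use the VALUE_DRIFT constant the practical refers to.

```java
/** Hypothetical helper that applies the practical's drift rule to one angle. */
final class DriftFilter {
    // Illustrative threshold in radians (about 2.9 degrees); an assumption,
    // not necessarily the value used by the starter app.
    static final float VALUE_DRIFT = 0.05f;

    private DriftFilter() {}

    /** Returns 0 for values within +/- VALUE_DRIFT of 0, otherwise the value unchanged. */
    static float removeDrift(float value) {
        return Math.abs(value) < VALUE_DRIFT ? 0f : value;
    }

    public static void main(String[] args) {
        float[] samples = {0.01f, -0.04f, 0.2f, -0.3f};
        for (float sample : samples) {
            System.out.println(sample + " -> " + removeDrift(sample));
        }
    }
}
```

A larger threshold hides more jitter but also ignores small deliberate tilts, so the value is a trade-off between stability and sensitivity.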

- Scroll down to the end of onSensorChanged(), and add these lines to set the alpha of all the spots to 0. This resets all the spots to be invisible each time onSensorChanged() is called. The code that follows sets only one spot in each pair, so without this reset a spot could keep its old, darker alpha after the tilt changes direction; for example, the bottom spot would stay dark after the pitch changes from positive to negative. Resetting the spots each time prevents these artifacts.

```java
mSpotTop.setAlpha(0f);
mSpotBottom.setAlpha(0f);
mSpotLeft.setAlpha(0f);
mSpotRight.setAlpha(0f);
```

- Update the alpha value for the appropriate spot with the values for pitch and roll:

```java
if (pitch > 0) {
    mSpotBottom.setAlpha(pitch);
} else {
    mSpotTop.setAlpha(Math.abs(pitch));
}
if (roll > 0) {
    mSpotLeft.setAlpha(roll);
} else {
    mSpotRight.setAlpha(Math.abs(roll));
}
```

Note that the pitch and roll values you calculated in the previous task are in radians: roll ranges from -π to π, and pitch from -π/2 to π/2. Alpha values, on the other hand, range only from 0.0 to 1.0. You could do the math to convert radians to alpha values, but you may have noticed earlier that the larger pitch and roll values occur only when the device is tilted to vertical or beyond. For the TiltSpot app you're only interested in displaying spots in response to some device tilt, not the full range, so you can conveniently use the radian values directly as input to the alpha.
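The spot rules are easier to test when written as a pure function that takes pitch and roll and returns all four alpha values. The plain-Java sketch below does that with hypothetical names. Unlike the step above, it clamps each alpha to the 0.0 to 1.0 range that the View.setAlpha() documentation describes; that clamp is a design choice of this sketch, not something the practical requires.

```java
/** Hypothetical pure function mirroring the practical's spot-alpha rules. */
final class SpotAlphas {
    final float top;
    final float bottom;
    final float left;
    final float right;

    private SpotAlphas(float top, float bottom, float left, float right) {
        this.top = top;
        this.bottom = bottom;
        this.left = left;
        this.right = right;
    }

    private static float clamp01(float v) {
        return Math.max(0f, Math.min(1f, v));
    }

    /**
     * Positive pitch darkens the bottom spot, otherwise the top spot;
     * positive roll darkens the left spot, otherwise the right spot.
     * Every other spot is 0 (invisible).
     */
    static SpotAlphas fromTilt(float pitch, float roll) {
        float top = 0f, bottom = 0f, left = 0f, right = 0f;
        if (pitch > 0) {
            bottom = clamp01(pitch);
        } else {
            top = clamp01(Math.abs(pitch));
        }
        if (roll > 0) {
            left = clamp01(roll);
        } else {
            right = clamp01(Math.abs(roll));
        }
        return new SpotAlphas(top, bottom, left, right);
    }

    @Override
    public String toString() {
        return "top=" + top + " bottom=" + bottom + " left=" + left + " right=" + right;
    }

    public static void main(String[] args) {
        System.out.println(fromTilt(0.3f, -1.5f));
        System.out.println(fromTilt(-0.5f, 0.25f));
    }
}
```

In onSensorChanged(), you could then assign a hypothetical alphas = SpotAlphas.fromTilt(pitch, roll) and call mSpotTop.setAlpha(alphas.top) and so on for each spot. Because the function always returns all four values, it also makes the separate reset to 0 unnecessary.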

- Build and run the app.

You should now be able to tilt the device and have the edge facing "up" display a dot which becomes darker the further up you tilt the device.

The Android system itself uses the accelerometer to determine when the user has turned the device sideways (from portrait to landscape mode, for a phone). By default, the system responds to this rotation by destroying the current activity and recreating it in the new orientation, redrawing your activity layout with the "top," "bottom," "left," and "right" edges of the screen now reflecting the new device position.

You might assume that with TiltSpot, if you rotate the device between portrait and landscape, the sensors report the correct data for the new orientation and the spots continue to appear on the correct edges. That's not the case. When the activity rotates, the activity's drawing coordinate system rotates with it, but the sensor coordinate system does not: it stays fixed relative to the device, regardless of how the device is oriented.

The second tricky point for handling activity rotation is that the default or natural orientation for your device may not be portrait. The default orientation for many tablet devices is landscape. The sensor's coordinate system is always based on the natural orientation of a device.

The TiltSpot starter app included a line in onCreate() to lock the orientation to portrait mode:

```java
setRequestedOrientation(ActivityInfo.SCREEN_ORIENTATION_PORTRAIT);
```

Locking the screen to portrait mode in this way solves one problem: it prevents the coordinate systems from getting out of sync on portrait-default devices. But on landscape-default devices, the technique forces an activity rotation, which puts the activity and sensor coordinate systems out of sync.

Here's the right way to handle device and activity rotation in sensor-based drawing: First, use the Display.getRotation() method to query the current device orientation. Then use the SensorManager.remapCoordinateSystem() method to remap the rotation matrix from the sensor data onto the correct axes. This is the technique you use in the TiltSpot app in this task.

The getRotation() method returns one of four integer constants, defined by the Surface class:

- ROTATION_0: The default orientation of the device (portrait for phones).
- ROTATION_90: The "sideways" orientation of the device (landscape for phones). Different devices may report 90 degrees either clockwise or counterclockwise from 0.
- ROTATION_180: Upside-down orientation, if the device allows it.
- ROTATION_270: Sideways orientation, in the opposite direction from ROTATION_90.

Note that many devices do not have ROTATION_180 at all or return ROTATION_90 or ROTATION_270 regardless of which direction the device was rotated (clockwise or counterclockwise). It is best to handle all possible rotations rather than to make assumptions for any particular device.

3.1 Get the device rotation and remap the coordinate system

- In MainActivity, edit onCreate() to remove or comment out the call to setRequestedOrientation():

```java
//setRequestedOrientation(ActivityInfo.SCREEN_ORIENTATION_PORTRAIT);
```

- In MainActivity, add a member variable for the Display object:

```java
private Display mDisplay;
```

- At the end of onCreate(), get a reference to the window manager, and then get the default display. You use the display to get the rotation in onSensorChanged().

```java
WindowManager wm = (WindowManager) getSystemService(WINDOW_SERVICE);
mDisplay = wm.getDefaultDisplay();
```

- In onSensorChanged(), just after the call to getRotationMatrix(), add a new array of float values to hold the adjusted rotation matrix:

```java
float[] rotationMatrixAdjusted = new float[9];
```

- Get the current device rotation from the display and add a switch statement for that value. Use the rotation constants from the Surface class for each case in the switch. For ROTATION_0, the default orientation, you don't need to remap the coordinates. You can just clone the data in the existing rotation matrix:

```java
switch (mDisplay.getRotation()) {
    case Surface.ROTATION_0:
        rotationMatrixAdjusted = rotationMatrix.clone();
        break;
}
```

- Add additional cases for the other rotations, and call the SensorManager.remapCoordinateSystem() method for each of these cases.

This method takes as arguments the original rotation matrix, the two new axes on which you want to remap the existing x-axis and y-axis, and an array to populate with the new data. Use the axis constants from the SensorManager class to represent the coordinate system axes.

```java
case Surface.ROTATION_90:
    SensorManager.remapCoordinateSystem(rotationMatrix,
            SensorManager.AXIS_Y, SensorManager.AXIS_MINUS_X,
            rotationMatrixAdjusted);
    break;
case Surface.ROTATION_180:
    SensorManager.remapCoordinateSystem(rotationMatrix,
            SensorManager.AXIS_MINUS_X, SensorManager.AXIS_MINUS_Y,
            rotationMatrixAdjusted);
    break;
case Surface.ROTATION_270:
    SensorManager.remapCoordinateSystem(rotationMatrix,
            SensorManager.AXIS_MINUS_Y, SensorManager.AXIS_X,
            rotationMatrixAdjusted);
    break;
```

- Modify the call to getOrientation() to use the new adjusted rotation matrix instead of the original matrix:

```java
SensorManager.getOrientation(rotationMatrixAdjusted,
        orientationValues);
```

- Build and run the app again. The colors of the spots should now change on the correct edges of the device, regardless of how the device is rotated.
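For the four screen rotations, remapping amounts to swapping and negating the first two columns of the 3x3 rotation matrix while the z column stays the same. The plain-Java sketch below reproduces that effect for the axis pairs used in the switch statement, which can help when checking the math away from a device. It was worked out for those four axis pairs only, assumes a nine-element row-major matrix, and uses the integer values 0 to 3 that Surface.ROTATION_0 through Surface.ROTATION_270 have. It is not the framework's implementation, so keep calling SensorManager.remapCoordinateSystem() in the app.

```java
/** Plain-Java sketch of remapping a row-major 3x3 rotation matrix for a screen rotation. */
final class RemapSketch {
    private RemapSketch() {}

    /**
     * @param inR      original 3x3 rotation matrix (9 floats, row-major)
     * @param rotation screen rotation code 0, 1, 2, or 3 (the values of
     *                 Surface.ROTATION_0, _90, _180, and _270)
     * @return a new remapped matrix; inR is not modified
     */
    static float[] remapForRotation(float[] inR, int rotation) {
        float[] out = new float[9];
        for (int row = 0; row < 3; row++) {
            float c0 = inR[row * 3];
            float c1 = inR[row * 3 + 1];
            float c2 = inR[row * 3 + 2];
            float newC0;
            float newC1;
            switch (rotation) {
                case 0:  newC0 = c0;  newC1 = c1;  break; // AXIS_X, AXIS_Y
                case 1:  newC0 = -c1; newC1 = c0;  break; // AXIS_Y, AXIS_MINUS_X
                case 2:  newC0 = -c0; newC1 = -c1; break; // AXIS_MINUS_X, AXIS_MINUS_Y
                case 3:  newC0 = c1;  newC1 = -c0; break; // AXIS_MINUS_Y, AXIS_X
                default: throw new IllegalArgumentException("rotation: " + rotation);
            }
            out[row * 3] = newC0;
            out[row * 3 + 1] = newC1;
            out[row * 3 + 2] = c2; // z column is unchanged
        }
        return out;
    }

    public static void main(String[] args) {
        float[] flatNorth = {1, 0, 0, 0, 1, 0, 0, 0, 1};
        float[] remapped = remapForRotation(flatNorth, 1);
        // Azimuth of a row-major 3x3 rotation matrix: atan2(R[1], R[4]).
        double azimuth = Math.atan2(remapped[1], remapped[4]);
        System.out.println(java.util.Arrays.toString(remapped) + " azimuth=" + azimuth);
    }
}
```

For example, with the device lying flat and its top edge pointing north (the identity matrix), remapping for rotation code 1 and reading the azimuth as atan2(R[1], R[4]) gives π/2, which is east: the direction the top of the rotated screen now points.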

Challenge

A general rule is to avoid doing a lot of work in the onSensorChanged() method, because by default the method runs on the main thread and may be called many times per second. In particular, the changes to the colors of the spots can look jerky if you're trying to do too much work in onSensorChanged(). Rewrite onSensorChanged() to use an AsyncTask object for all the calculations and updates to views. (AsyncTask is deprecated as of API level 30; in a newer app, you could run the calculations on a background Executor and post the view updates back to the main thread instead.)

- Motion sensors such as the accelerometer measure device movement such as tilt, shake, rotation, or swing.

- Position sensors such as the geomagnetic field sensor (magnetometer) can determine the device's position relative to the Earth.

- The accelerometer measures device acceleration, that is, how much the device is accelerating and in which direction. Acceleration forces on the device include the force of gravity.

- The magnetometer measures the strength of magnetic fields around the device. This includes Earth's magnetic field, although other fields nearby may affect sensor readings.

- You can use combined data from motion and position sensors to determine the device's orientation (its position in space) more accurately than with individual sensors.

- The 3-axis device-coordinate system that most sensors use is relative to the device itself in its default orientation. The y-axis is vertical and points toward the top edge of the device, the x-axis is horizontal and points to the right edge of the device, and the z-axis extends up from the surface of the screen.

- The Earth's coordinate system is relative to the surface of the Earth, with the y-axis pointing to magnetic north, the x-axis 90 degrees from y and pointing approximately east, and the z-axis extending up into space.

- The alpha value determines the opacity of a drawable or view. Lower alpha values indicate more transparency. Use the setAlpha() method to programmatically change the alpha value for a view.
- When Android automatically rotates the activity in response to device orientation, the activity coordinate system also rotates. However, the device-coordinate system that the sensors use remains fixed.

- The device-coordinate system that sensors use is based on the natural orientation of the device, which may be portrait (typical for phones) or landscape (typical for many tablets).

- Query the current device orientation with the Display.getRotation() method.
- Use the current device orientation to remap the coordinate system in the right orientation with the SensorManager.remapCoordinateSystem() method.

Orientation angles describe how far the device is oriented or tilted with respect to the Earth's coordinate system. There are three components to orientation:

- Azimuth: The direction (north/south/east/west) the device is pointing. 0 is magnetic north.

- Pitch: The top-to-bottom tilt of the device. 0 is flat.

- Roll: The left-to-right tilt of the device. 0 is flat.

To determine the orientation of the device:

- Use the SensorManager.getRotationMatrix() method. The method combines data from the accelerometer and magnetometer and translates the data into the Earth's coordinate system.
- Use the SensorManager.getOrientation() method with a rotation matrix to get the orientation angles of the device.
